Developing a custom experiment orchestrator to solve complex modeling problems.

In this post we will explore why experiment orchestration is important, existing orchestration solutions, how to build your own orchestrator with MongoDB, and why that might be beneficial in some use cases.

Who is this useful for? Anyone fitting models to data who needs a way to organize the resulting experiments.

How advanced is this post? The idea of orchestration is fairly simple, and is accessible to virtually any skill level. The example should be accessible to backend developers or data scientists trying to branch out.

Prerequisites: A working understanding of core backend concepts, like databases and servers, as well as core data science concepts, like hyperparameters.

Code: Full code can be found here. Note: this repo is a WIP at the time of writing this article.

What is Experiment Orchestration?

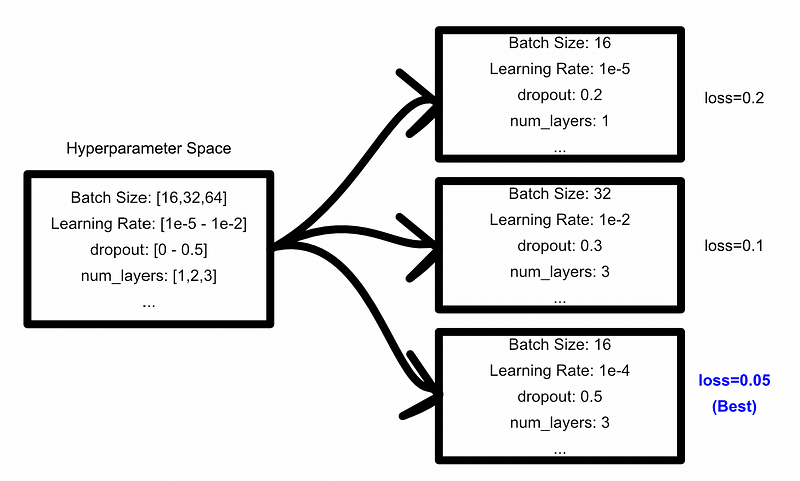

By “experiment orchestration” I am referring to a wide range of tasks that share the same general concept. The most common form of experiment orchestration is the hyperparameter sweep: given some range of hyperparameter values, you sweep through that range to find the best hyperparameter set for a given modeling problem. The organization of these types of planned experiments is generally referred to as orchestration.
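To make the idea concrete, here is a minimal grid sweep in plain Python. The hyperparameter names and values are made up for illustration, and the `score` function is a hypothetical stand-in for training plus validation. The point is only that a sweep enumerates candidate configurations from a defined space and ranks them.

```python
import itertools

# Hypothetical hyperparameter space: each key maps to the values to try.
space = {
    "learning_rate": [0.01, 0.1],
    "dropout": [0.3, 0.5],
    "layers": [1, 2, 3],
}


def grid(space):
    """Yield every combination of hyperparameter values as a dict."""
    keys = list(space)
    for values in itertools.product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


def score(config):
    """Stand-in for training + validation; lower is better (hypothetical)."""
    return abs(config["learning_rate"] - 0.1) + config["dropout"] / config["layers"]


configs = list(grid(space))      # 2 * 2 * 3 = 12 candidate configurations
best = min(configs, key=score)
print(len(configs), best)
```

A grid visits every combination, so its cost grows multiplicatively with each hyperparameter; random search, which samples points from the space instead, is the usual alternative when the grid gets large.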

Simple sweeps get the job done most of the time, but as modeling problems become more complex it is common to have more complex experiments. You may find yourself needing to experiment with multiple model types, each with their own hyperparameter space, across multiple datasets.

For instance, I’m currently conducting research on the performance of different modeling strategies within non-homogeneous modeling applications. I’m not interested in “what is the best set of hyperparameters to solve a particular problem”, but rather “how do multiple model types, each with their own hyperparameter space, perform across numerous classification and regression tasks”.

The goal of an experiment orchestrator is to function as the central hub of the experiment, no matter how complex the experiment’s definition is, such that a single worker, or perhaps a group of workers, can run subsets of the experiment.

We will be building a system like this using MongoDB Data Services to store results, and MongoDB Application Services as the server which hosts the logic and networking of the system. While this is incredibly powerful, it’s also incredibly simple; I had the whole thing wrapped up over a single weekend.

What Solutions Exist?

Weights & Biases (W&B) is an obvious choice.

For W&B Sweeps, you define a sweep, an agent (training and validation code), and log results while the agent is running. Those steps are pretty straightforward, and look something like this:

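1. Defining a Sweep

```python
"""Telling W&B what hyperparameter space I want to explore"""
sweep_config = {'method': 'random'}  # search strategy: 'grid', 'random' or 'bayes'

parameters_dict = {
    'optimizer': {'values': ['adam', 'sgd']},
    'fc_layer_size': {'values': [128, 256, 512]},
    'dropout': {'values': [0.3, 0.4, 0.5]},
    # batch_size, learning_rate and epochs, used by the agent below,
    # would also need entries here
}
sweep_config['parameters'] = parameters_dict
```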

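2. Defining an Agent

```python
"""Defining a model which works based off of the hyperparameters"""
import wandb

# Gets a configuration from the orchestrator (valid once a run has been
# started, e.g. by wandb.init() inside a function launched by a sweep agent).
# build_dataset, build_network and build_optimizer are user-defined helpers.
config = wandb.config
loader = build_dataset(config.batch_size)
network = build_network(config.fc_layer_size, config.dropout)
optimizer = build_optimizer(network, config.optimizer, config.learning_rate)
```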

3. Log Results

```python
"""Training a model and logging the results"""
for epoch in range(config.epochs):
    avg_loss = train_epoch(network, loader, optimizer)  # train_epoch is user-defined
    wandb.log({"loss": avg_loss, "epoch": epoch})
```

This is sufficient for 90% of use cases, and is the generally recommended approach for experiment orchestration. This approach didn’t work with my use case, however. The following section presents my solution.

The Case for Building Your Own Orchestrator

Again, for 90% of applications (especially business applications), the method described above is more than adequate. Relying on an existing system means benefiting from a robustness and feature maturity that surpass any feasible quick-and-dirty solution.

That said, systems like W&B seem designed to find “solutions”. They assume you have a specific dataset and want to explore candidate solutions to find the one that is best for that dataset. For my research needs, managing multiple datasets, multiple models, and the compatibility between the two was frustratingly cumbersome in W&B.

To use W&B, it seemed like I would have to build an orchestrator of orchestrators by somehow organizing and managing multiple sweeps across multiple datasets. On top of an already complex task, I would need to deal with integration issues as well. It was around this point that I decided rebuilding W&B-style sweeps from scratch, with a few minor modifications for my needs, would be the best fit.

Building a Custom Orchestrator in a Weekend

I implemented an orchestrator for my specific problem. While the solution is problem-specific, the general idea should carry over to most machine learning experimentation needs.

Defining The Problem

I have a collection of around 45 tabular datasets across a variety of domains. Each of these datasets can be considered a “task” that any given model could be good or bad at. Some tasks might be regression tasks, while others might be classification tasks.

The general idea is to build an orchestrator which can manage the application of a family of models to a family of datasets. This orchestrator should aggregate these results for further analysis.

Also, naturally, the objective of this orchestrator is to solve problems, not become a problem. The idea is to cut some corners where I can while solving the problems that I have. As a result, this solution is pretty bare-bones, and a bit hacky.

Choice of Technology

For this solution I used MongoDB Application Services and MongoDB Data Services, or whatever they’re called now. MongoDB has been going through a lot of rebranding in the last year. The system I’m using used to be called MongoDB Atlas and Realm, but now Realm might be part of Atlas? I’m not sure.

Regardless, MongoDB on the cloud is essentially a backend in a box. You can set up a database, application layer, and API layer very quickly with minimal overhead. In my experience, because of confusing documentation, getting something production-ready can be an uphill battle. However, for rapid prototyping of backend resources, I have yet to find a better alternative.

The next few sections describe how I broke the problem of orchestration into Experiments and Runs, and what those practically look like.

Defining an Experiment

In this custom approach I essentially co-opted W&B’s design of sweeps and added some of my own ideas. The core system runs on an “Experiment”, which describes models, hyperparameter spaces, datasets, and how the three should be associated together.

```python
"""
An example of an experiment definition. Each "experiment" has three key fields:
  - data_groups: named groups of dataset identifiers
  - model_groups: named models, each a model identifier plus its hyperparameter space
  - applications: which model_groups should apply to which data_groups

This approach thinks of a model as two things:
  - a unique identifier, which references some model definition
  - a hyperparameter space associated with that model

"runs_per_pair" defines how often a certain association should be run. For
instance, "test model X's hyperparameters on dataset Y 10 times".
"""
experiment = {
    "name": "testExp0",
    "runs_per_pair": "10",
    "definition": {
        "data_groups": {
            "group0": ["dataUID0", "dataUID1", "dataUID2"],
            "group1": ["dataUID3", "dataUID4", "dataUID5"],
        },
        "model_groups": {
            "model0": {
                "model": "modelUID0",
                "hype": {
                    "learning_rate": {"distribution": "log_uniform", "min": 0.0, "max": 2.5},
                    "layers": {"distribution": "int_uniform", "min": 0, "max": 2},
                },
            },
            "model1": {
                "model": "modelUID1",
                "hype": {
                    "learning_rate": {"distribution": "log_uniform", "min": 0.0, "max": 2.5},
                },
            },
        },
        "applications": {
            "group0": ["model0"],
            "group1": ["model0", "model1"],
        },
    },
}
```

This experiment then gets broken down into a list of model/dataset pairs: individual explorations which some worker needs to run. These are generated by walking through every entry in “applications” and pairing each listed model group with each dataset in the corresponding data group.
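A minimal sketch of that expansion step, assuming the field names from the example definition (“data_groups”, “model_groups”, “applications”). The function name `expand_pairs` and its (model_group, data_uid) output shape are hypothetical choices for illustration, not the repo’s code.

```python
def expand_pairs(definition):
    """Turn an experiment definition into a list of (model_group, data_uid) pairs."""
    data_groups = definition["data_groups"]
    model_groups = definition["model_groups"]
    pairs = []
    for data_group, model_names in definition["applications"].items():
        if data_group not in data_groups:
            raise KeyError(f"unknown data group: {data_group}")
        for model_name in model_names:
            if model_name not in model_groups:
                raise KeyError(f"unknown model group: {model_name}")
            # Every dataset in the group gets paired with this model group.
            for data_uid in data_groups[data_group]:
                pairs.append((model_name, data_uid))
    return pairs


# Trimmed copy of the example definition (hyperparameter spaces omitted).
definition = {
    "data_groups": {
        "group0": ["dataUID0", "dataUID1", "dataUID2"],
        "group1": ["dataUID3", "dataUID4", "dataUID5"],
    },
    "model_groups": {"model0": {"model": "modelUID0"}, "model1": {"model": "modelUID1"}},
    "applications": {"group0": ["model0"], "group1": ["model0", "model1"]},
}
pairs = expand_pairs(definition)
runs_per_pair = int("10")  # the example stores runs_per_pair as the string "10"
total_runs = len(pairs) * runs_per_pair
print(len(pairs), total_runs)  # 9 90
```

With the example definition, group0 contributes 3 pairs and group1 contributes 6, so 10 runs per pair means 90 runs for the whole experiment.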

This is all created by calling the “/registerExperiment” API endpoint and passing it the experiment definition.

I opted to make experiments “declarative”, kind of like a Terraform script if you’re familiar with that. When you register an experiment you either create a new one or get the existing one, keyed on the experiment name. That way you can use the same script on multiple workers: the first one creates the experiment, and the others simply use the one that has already been created. (Or at least that’s the idea. You have to be careful about race conditions with this line of thinking.)
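A minimal in-memory sketch of that create-or-get behaviour, keyed on experiment name. The `ExperimentRegistry` class is hypothetical, and the lock stands in for whatever atomicity the real backend provides. In MongoDB, the usual way to get the same guarantee is an upsert that uses `$setOnInsert`, backed by a unique index on the name field (retrying on a duplicate-key error), so two workers registering at the same moment cannot both create the experiment.

```python
import threading


class ExperimentRegistry:
    """Hypothetical in-memory stand-in for /registerExperiment."""

    def __init__(self):
        self._by_name = {}
        self._lock = threading.Lock()

    def register(self, experiment):
        """Return (stored_experiment, created) for experiment["name"].

        The first caller creates the experiment; later callers get the
        stored experiment back, even if their own definition differs.
        """
        name = experiment["name"]
        with self._lock:  # check-and-insert must be atomic to avoid the race
            if name in self._by_name:
                return self._by_name[name], False
            self._by_name[name] = experiment
            return experiment, True


# Eight "workers" run the same registration script concurrently.
registry = ExperimentRegistry()
results = []


def worker():
    results.append(registry.register({"name": "testExp0", "runs_per_pair": "10"}))


threads = [threading.Thread(target=worker) for _ in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(sum(created for _, created in results))  # 1: only one worker created it
```

Without the lock, two threads could both see the name as missing and both insert, which is exactly the race the paragraph above warns about.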

Runs

Now that the experiment has been defined, along with individual model/task pairs which need to be run, we can start runs. This is where the rubber meets the road for the orchestrator. We have to:

1. Decide which model/task pair a worker should work on

2. Get hyperparameters from that model’s hyperparameter space for the worker to use

3. Record ongoing results

4. Manage runs which have been completed (separating those which might have failed).

The “run” construct exists within the orchestrator to record this information.

A run is directly associated with a model/task pair (mtpair), the experiment that mtpair exists in, who created the experiment, the model, the task, the specific point sampled from the hyperparameter space, and the results logged on a per-epoch basis. Runs are managed with the “/beginRun”, “/updateRun”, and “/endRun” endpoints.
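One way to picture a run record is as a small data structure. The sketch below is an assumption: the field names are illustrative and not taken from the repo, but each field corresponds to one of the associations listed above.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Run:
    """Hypothetical shape of a run record; field names are illustrative."""
    run_id: str
    experiment: str                   # experiment the mtpair belongs to
    owner: str                        # user who created the experiment
    model: str                        # model group / model identifier
    task: str                         # dataset identifier
    hyperparameters: Dict[str, Any]   # the sampled point in the model's space
    epochs: List[Dict[str, float]] = field(default_factory=list)  # per-epoch metrics
    ended: bool = False

    def log_epoch(self, metrics: Dict[str, float]) -> None:
        """Append one epoch's metrics; ended runs cannot be continued."""
        if self.ended:
            raise RuntimeError("run has already ended")
        self.epochs.append(dict(metrics))


run = Run("run0", "testExp0", "alice", "model0", "dataUID0", {"learning_rate": 1.5})
run.log_epoch({"loss": 0.9})
run.log_epoch({"loss": 0.7})
run.ended = True
```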

/beginRun looks at all the existing runs and creates a new run on the mtpair with the fewest completed and initiated runs. After deciding which model/task pair to prioritize, /beginRun uses random search to sample a specific set of hyperparameters from that model’s hyperparameter space. It then returns a handle for that run.
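The two decisions /beginRun makes can be sketched in a few lines. This is a hypothetical reconstruction, not the repo’s code: `pick_pair` chooses the least-run pair (ties go to the first pair listed), and `sample` draws one point by random search. For “log_uniform” it assumes W&B’s convention, where `min` and `max` bound the natural logarithm of the value; that reading is also why a `min` of 0.0 in the example definition is valid.

```python
import math
import random
from collections import Counter


def pick_pair(pairs, runs):
    """Return the (model, task) pair with the fewest runs, completed or in progress.

    `runs` is a list of dicts with "model" and "task" keys. min() returns the
    first minimal element, so ties go to the earliest pair in `pairs`.
    """
    counts = Counter((r["model"], r["task"]) for r in runs)
    return min(pairs, key=lambda p: counts[p])


def sample(space, rng=random):
    """Draw one point from a hyperparameter space by random search."""
    point = {}
    for name, spec in space.items():
        lo, hi = spec["min"], spec["max"]
        if spec["distribution"] == "log_uniform":
            # Assumed convention: log(value) is uniform on [min, max].
            point[name] = math.exp(rng.uniform(lo, hi))
        elif spec["distribution"] == "int_uniform":
            point[name] = rng.randint(lo, hi)  # inclusive on both ends
        else:
            raise ValueError(f"unsupported distribution: {spec['distribution']}")
    return point


space = {
    "learning_rate": {"distribution": "log_uniform", "min": 0.0, "max": 2.5},
    "layers": {"distribution": "int_uniform", "min": 0, "max": 2},
}
pairs = [("model0", "dataUID0"), ("model0", "dataUID1")]
runs = [{"model": "model0", "task": "dataUID0"}]
chosen = pick_pair(pairs, runs)  # ("model0", "dataUID1"): it has no runs yet
hype = sample(space, random.Random(0))
```

Counting initiated runs as well as completed ones is what spreads concurrent workers across pairs instead of piling them onto the same one.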

/updateRun allows you to register metrics on a per-epoch basis. Every epoch you call /updateRun and pass a dictionary of metrics for that run. Those can be pretty much whatever the user feels is appropriate.

/endRun does a few quality-of-life things. Ended runs can’t be continued, so /endRun lets the code declare when a run has been completed. It also updates the record for the run within the experiment and marks the run as successfully completed. Runs which unexpectedly fail will never be marked as ended, so implementing this webhook lets the orchestrator tolerate faulty workers.
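The worker side of this lifecycle can be sketched against a fake, in-memory orchestrator. `FakeOrchestrator` and its `begin_run`/`update_run`/`end_run` methods are hypothetical stand-ins for the three endpoints; a real client would make HTTP requests instead. The key detail is that `end_run` is only reached when every epoch succeeds, so a crashed run is left un-ended and can be separated from completed ones.

```python
class FakeOrchestrator:
    """Hypothetical in-memory stand-in for /beginRun, /updateRun and /endRun."""

    def __init__(self):
        self.runs = {}

    def begin_run(self):
        run_id = f"run{len(self.runs)}"
        # A real /beginRun would pick an mtpair and sample hyperparameters.
        self.runs[run_id] = {"metrics": [], "ended": False, "hype": {"learning_rate": 0.01}}
        return run_id, self.runs[run_id]["hype"]

    def update_run(self, run_id, metrics):
        if self.runs[run_id]["ended"]:
            raise RuntimeError("ended runs can't be continued")
        self.runs[run_id]["metrics"].append(dict(metrics))

    def end_run(self, run_id):
        self.runs[run_id]["ended"] = True


def work(orchestrator, train_epoch, epochs=3):
    """Execute one run; end_run is skipped if any epoch raises."""
    run_id, hype = orchestrator.begin_run()
    for epoch in range(epochs):
        loss = train_epoch(hype, epoch)  # may raise if the worker fails
        orchestrator.update_run(run_id, {"epoch": epoch, "loss": loss})
    orchestrator.end_run(run_id)
    return run_id


def flaky_epoch(hype, epoch):
    """Dummy training step that crashes on the second epoch."""
    if epoch == 1:
        raise RuntimeError("worker crashed")
    return 0.5


orch = FakeOrchestrator()
work(orch, lambda hype, epoch: 1.0 / (epoch + 1))  # completes normally
try:
    work(orch, flaky_epoch)
except RuntimeError:
    pass  # the crashed run is simply never ended
print({run_id: run["ended"] for run_id, run in orch.runs.items()})
```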

Security

This system uses JSON Web Tokens (JWTs) to provide some rudimentary authentication. Realistically, the risk profile of a project like this, from a research perspective, is pretty low. That said, the system does validate per-user API tokens, and provides some security measures to ensure data integrity while allowing for collaboration.
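JWTs can be signed in several ways. The sketch below assumes HS256 (an HMAC-SHA256 signature over a shared secret) and uses only the Python standard library to show what validating a token involves: check the signature, then check expiry. It is not the repo’s code, and a real deployment would normally rely on a maintained JWT library or the platform’s built-in authentication. The function names are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))  # restore padding


def sign_hs256(payload: dict, secret: bytes) -> str:
    """Build a compact header.payload.signature token (HS256)."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = (
        _b64url_encode(json.dumps(header, separators=(",", ":")).encode())
        + "."
        + _b64url_encode(json.dumps(payload, separators=(",", ":")).encode())
    )
    sig = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return signing_input + "." + _b64url_encode(sig)


def verify_hs256(token: str, secret: bytes) -> dict:
    """Return the payload if the signature and expiry check out, else raise ValueError."""
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
    except ValueError:
        raise ValueError("malformed token") from None
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    expected = hmac.new(
        secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256
    ).digest()
    if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):  # constant-time
        raise ValueError("bad signature")
    payload = json.loads(_b64url_decode(payload_b64))
    if "exp" in payload and payload["exp"] < time.time():
        raise ValueError("token expired")
    return payload
```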

Also, in terms of security for my wallet, I’m using the free tier and got that set up without needing to register a payment method. (MongoDB on the cloud is quirky, but it really is pretty amazing for prototyping)

And that’s it!

Normally I include code, but it’s a whole repo which would kind of be a slog to put in an article. Again, if you want to check out the repo you can look here. Specifically, you can look at the function definitions here, which are essentially the whole thing in a nutshell.

Updates

I added a webhook called beginRunSticky, which begins a new run but accepts a dataset to be “stuck” to. It prioritizes giving the worker a new run on the specified task, allowing multiple runs to execute without needing to load a new dataset.
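A hypothetical sketch of a sticky selection policy: prefer the least-run pair on the requested task as long as that task still needs runs, and otherwise fall back to the least-run pair overall. The function name and the use of `runs_per_pair` as the cut-off are assumptions about how such a policy could work, not a description of the repo’s implementation.

```python
from collections import Counter


def pick_pair_sticky(pairs, runs, runs_per_pair, sticky_task=None):
    """Prefer pairs on `sticky_task` that still need runs; else pick globally.

    `pairs` are (model, task) tuples; `runs` are dicts with "model"/"task" keys.
    """
    counts = Counter((r["model"], r["task"]) for r in runs)
    sticky = [p for p in pairs if p[1] == sticky_task and counts[p] < runs_per_pair]
    candidates = sticky or pairs  # fall back when the sticky task is exhausted
    return min(candidates, key=lambda p: counts[p])


pairs = [("model0", "dataUID0"), ("model1", "dataUID0"), ("model0", "dataUID1")]
runs = [{"model": "model0", "task": "dataUID0"}] * 2 + [{"model": "model1", "task": "dataUID0"}]
print(pick_pair_sticky(pairs, runs, 10, sticky_task="dataUID0"))  # ('model1', 'dataUID0')
print(pick_pair_sticky(pairs, runs, 10))                          # ('model0', 'dataUID1')
```

The worker keeps the already-loaded dataUID0 even though dataUID1 has fewer runs, which is the trade-off the sticky variant makes: slightly less even coverage in exchange for fewer dataset loads.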

Follow For More!

In future posts, I’ll also be describing several landmark papers in the ML space, with an emphasis on practical and intuitive explanations.

Attribution: All of the images in this document were created by Daniel Warfield, unless a source is otherwise provided. You can use any images in this post for your own non-commercial purposes, so long as you reference this article, https://danielwarfield.dev, or both.