How the study of metals influenced AI at its core.

In some ways, you can frame nearly every problem as a function with some number of inputs and one or more outputs that need to be optimized. Because that framing is so useful, people across many disciplines have thought a lot about optimization. Two notable examples are artificial intelligence and metallurgy.

Metallurgical Optimization

When a metal such as high-carbon steel is heated to extreme temperatures, the atoms within its structure can move more freely, and the metal behaves a bit like putty.

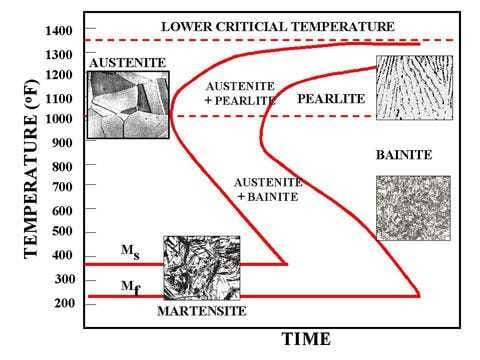

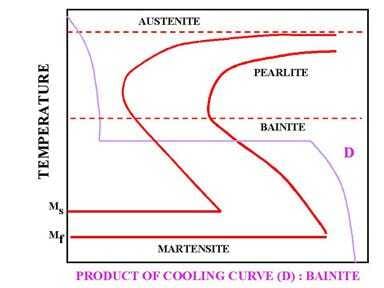

As the metal cools below a certain threshold, it begins to crystallize. This process happens spontaneously at different nucleation sites. The final grain structure and its characteristics have a massive impact on the mechanical performance of the metal.

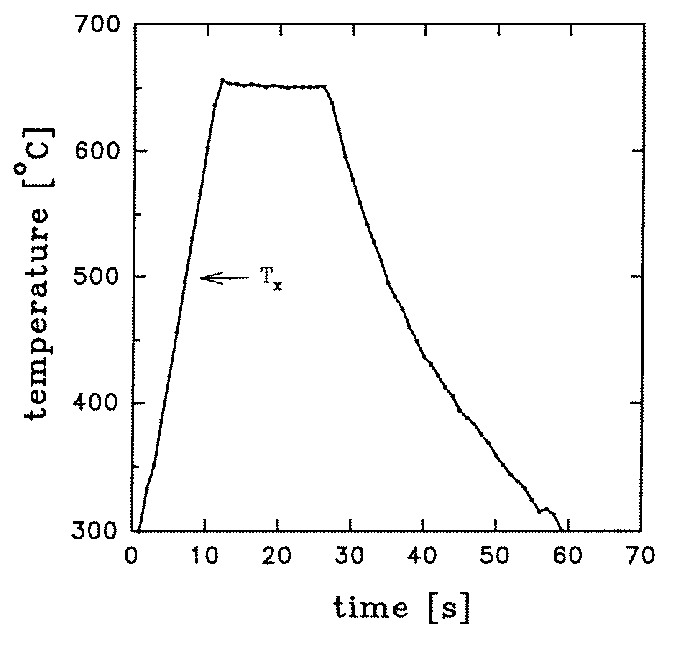

To create a final product with the desired mechanical properties, metals are cooled according to a controlled schedule. Depending on that schedule, the resulting metal might be hard or soft, and it might be stable or contain internal stresses that can lead to brittle failure.

When a metal has too many internal stresses (points between crystalline grains that create internal pressure), a common strategy is to anneal it. Annealing is the process of heating the metal and then cooling it slowly, which allows some motion within the internal structure. That motion lets the internal stresses gradually relax away.

How Metallurgy Impacted AI

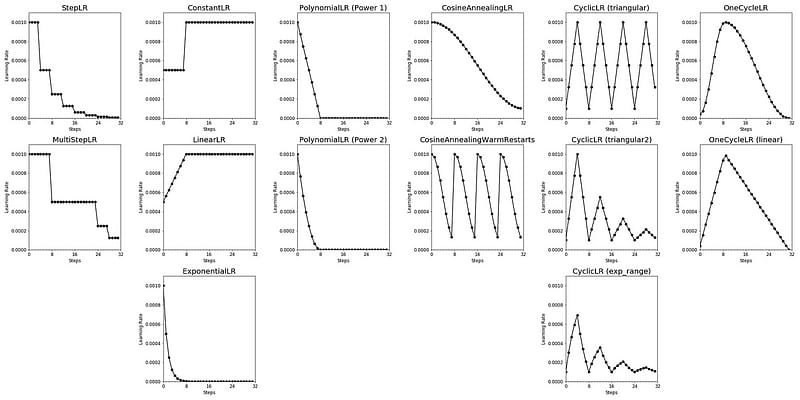

A landmark in statistical multivariate optimization was the paper Optimization by Simulated Annealing (1983). It suggested that complex functions can be optimized in a way that is conceptually similar to annealing. We expose the parameters of a model to a high temperature, which controls how readily the search accepts changes that make the result worse (loosely analogous to a large learning rate), so that the parameters “melt” into a space of chaos. Then we lower that temperature to “anneal” the parameters so that they settle into a minimum of the loss function.

The annealing schedule may be developed by trial and error for a given problem, or may consist of just warming the system until it is obviously melted, then cooling in slow stages until diffusion of the components ceases. — Kirkpatrick et al.
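To make the "melt, then cool in slow stages" idea concrete, here is a minimal sketch of simulated annealing in plain Python. It is not the exact procedure from the paper. The toy loss `bumpy`, the geometric cooling factor, and every parameter value are assumptions chosen for illustration.

```python
import math
import random


def simulated_annealing(loss, x0, t_start=10.0, t_end=1e-3, cooling=0.95,
                        steps_per_temp=50, step_size=1.0, seed=0):
    """Minimize a 1-D function `loss`, starting the search at x0."""
    rng = random.Random(seed)
    x, fx = x0, loss(x0)
    best_x, best_fx = x, fx
    t = t_start
    while t > t_end:
        for _ in range(steps_per_temp):
            # Propose a random candidate near the current point.
            cand = x + rng.uniform(-step_size, step_size)
            f_cand = loss(cand)
            delta = f_cand - fx
            # Always accept improvements. Accept a worse candidate with
            # probability exp(-delta / t), which shrinks as t falls.
            if delta <= 0 or rng.random() < math.exp(-delta / t):
                x, fx = cand, f_cand
                if fx < best_fx:
                    best_x, best_fx = x, fx
        t *= cooling  # geometric cooling: one simple choice of schedule
    return best_x, best_fx


def bumpy(x):
    # Hypothetical toy loss with many local minima; global minimum at x = 0.
    return x * x + 10.0 * (1.0 - math.cos(2.0 * math.pi * x))


best_x, best_loss = simulated_annealing(bumpy, x0=4.0)
print(best_x, best_loss)
```

At high temperature the search accepts almost any move, so it wanders freely between valleys. As the temperature drops it becomes pickier and settles into whichever valley it is in. How fast to cool is exactly the schedule the quote above says may need trial and error.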

As far as I understand, this idea is the conceptual ancestor of the learning rate scheduler, which is widely used today. Of course, various schedulers have since been created that deviate from classic metallurgical annealing, but the terms “temperature” and “annealing” are both still used heavily in the literature.
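As a rough illustration of what a learning rate scheduler looks like, here is a small sketch of cosine annealing, one popular schedule, applied to gradient descent on a toy problem. The function name `cosine_annealed_lr`, the toy loss `(w - 3)^2`, and the specific learning rate bounds are all assumptions made for this example. Deep learning libraries ship their own scheduler implementations.

```python
import math


def cosine_annealed_lr(step, total_steps, lr_max=0.1, lr_min=0.001):
    """Learning rate that decays from lr_max to lr_min along a half cosine."""
    progress = step / max(1, total_steps - 1)
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))


def grad(w):
    # Gradient of the hypothetical toy loss (w - 3)^2.
    return 2.0 * (w - 3.0)


w = 0.0
total_steps = 100
for step in range(total_steps):
    lr = cosine_annealed_lr(step, total_steps)  # "hot" early, "cool" late
    w -= lr * grad(w)

print(w)
```

Early steps use a large learning rate, so the parameter moves quickly. Later steps use a small one, so it settles gently near the minimum at `w = 3`.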
